FetchAid: Making Parcel Lockers More Accessible to Blind and Low Vision People With Deep-learning Enhanced Touchscreen Guidance, Error-Recovery Mechanism, and AR-based Search Support

Abstract

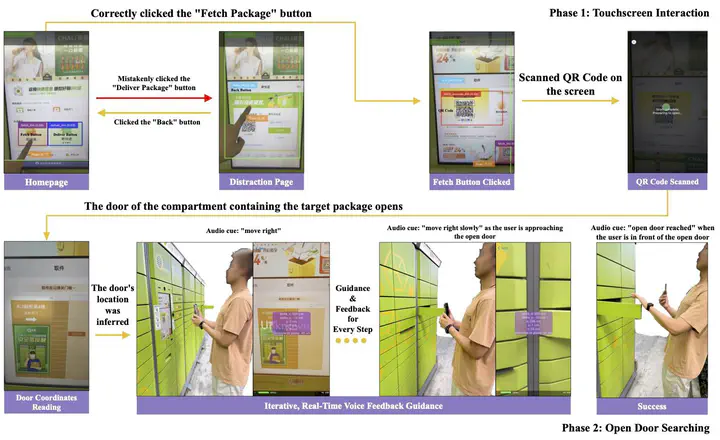

Parcel lockers have become an increasingly prevalent last-mile delivery method. Yet a recent study revealed the accessibility challenges they pose to blind and low-vision (BLV) people. Informed by that study, we designed FetchAid, a standalone intelligent mobile app that assists BLV people in using a parcel locker in real time by integrating computer vision and augmented reality (AR) technologies. FetchAid first uses a deep network to detect the user’s fingertip and the relevant buttons on the parcel locker’s touchscreen, guiding the user to reveal and scan the QR code to open the target compartment door. It then guides the user to reach the door safely with AR-based, context-aware audio feedback. Moreover, FetchAid provides an error-recovery mechanism and real-time feedback to keep the user on track. In a study with 12 BLV participants, FetchAid substantially improved task accomplishment and efficiency and reduced frustration and overall effort, regardless of participants’ vision conditions and previous experience.