Human-AI Collaboration for UX Evaluation: Effects of Explanation and Synchronization

Abstract

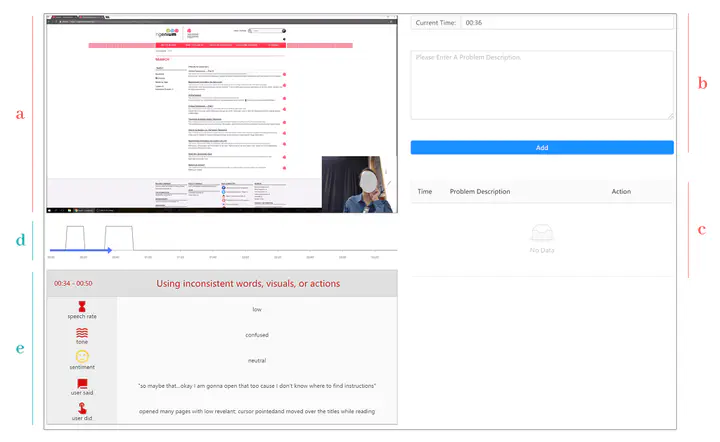

Analyzing usability test videos is arduous. Although recent research has shown the promise of AI in assisting with such tasks, it remains largely unknown how AI should be designed to facilitate effective collaboration between user experience (UX) evaluators and AI. Inspired by the concepts of agency and work context in the human-AI collaboration literature, we studied two corresponding design factors for AI-assisted UX evaluation: explanations and synchronization. Explanations allow the AI to inform humans how it identifies UX problems from a usability test session; synchronization refers to the two ways in which humans and AI collaborate: synchronously and asynchronously. We iteratively designed a tool, AI Assistant, with four UI versions corresponding to the two levels of explanations (with/without) and of synchronization (sync/async). Adopting a hybrid wizard-of-oz approach to simulate an AI with reasonable performance, we conducted a mixed-method study in which 24 UX evaluators identified UX problems from usability test videos using AI Assistant. Our quantitative and qualitative results show that AI with explanations, whether presented synchronously or asynchronously, provided better support for UX evaluators’ analysis and was perceived more positively; without explanations, synchronous AI improved UX evaluators’ performance and engagement more than asynchronous AI did. Lastly, we present design implications for AI-assisted UX evaluation and for facilitating more effective human-AI collaboration.