Eyelid Gestures on Mobile Devices for People with Motor Impairments

Credit: Getty Images

Abstract

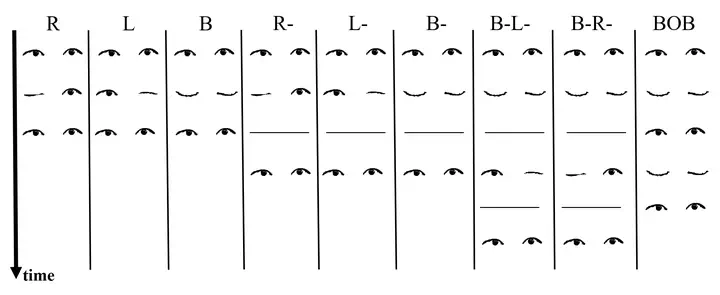

Eye-based interactions for people with motor impairments have often used clunky or specialized equipment (e.g., eye-trackers with non-mobile computers) and primarily focused on gaze and blinks. However, the two eyelids can open and close for different durations and in different orders to form various eyelid gestures. We take a first step to design, detect, and evaluate a set of eyelid gestures for people with motor impairments on mobile devices. We present an algorithm to detect nine eyelid gestures on smartphones in real time and evaluate it with twelve able-bodied people and four people with severe motor impairments in two studies. The results of the study with people with motor impairments show that the algorithm can detect the gestures with 0.76 and 0.69 overall accuracy in user-dependent and user-independent evaluations, respectively. Moreover, we design and evaluate a gesture mapping scheme that allows users to navigate mobile applications using only eyelid gestures. Finally, we present recommendations for designing and using eyelid gestures for people with motor impairments.
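To make the idea of eyelids closing "for different durations and in different orders" concrete, the following is a minimal illustrative sketch, not the detection algorithm evaluated in this work. It assumes a per-frame eye-open probability for each eye is already available from some face-tracking library; the state labels (`B`, `L`, `R`, `O`), the 0.5 threshold, the 30 fps frame rate, and the 1-second "long" cutoff are all hypothetical choices made for the example.

```python
from itertools import groupby


def frame_state(left_open: float, right_open: float, threshold: float = 0.5) -> str:
    """Map one frame's eye-open probabilities to a coarse eyelid state.

    Labels are hypothetical: "B" = both eyes closed, "L" = only the left eye
    closed, "R" = only the right eye closed, "O" = both eyes open.
    """
    left_closed = left_open < threshold
    right_closed = right_open < threshold
    if left_closed and right_closed:
        return "B"
    if left_closed:
        return "L"
    if right_closed:
        return "R"
    return "O"


def segment(frames, fps: float = 30.0, threshold: float = 0.5):
    """Run-length encode per-frame states into (state, duration_seconds) pairs."""
    states = [frame_state(left, right, threshold) for left, right in frames]
    return [(state, len(list(run)) / fps) for state, run in groupby(states)]


def describe(segments, long_cutoff: float = 1.0):
    """Drop open-eye segments and tag closures lasting at least long_cutoff seconds."""
    tokens = []
    for state, duration in segments:
        if state == "O":
            continue
        tokens.append(state + ("-long" if duration >= long_cutoff else ""))
    return tokens


if __name__ == "__main__":
    # Synthetic stream: both closed, then only the right eye closed, then both closed.
    frames = (
        [(0.9, 0.9)] * 3
        + [(0.1, 0.1)] * 6
        + [(0.9, 0.1)] * 6
        + [(0.1, 0.1)] * 3
        + [(0.9, 0.9)] * 3
    )
    print(describe(segment(frames)))
```

The resulting token sequence captures both the order of the closures and whether each was short or long. A real detector would also need to handle noisy probabilities, head movement, and individual differences in how fully a user can close each eye, none of which this sketch addresses.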